Over at Inside Higher Ed, Stephanie Lee, in "Reputation Without Rigor," looks at the methodology behind the US News peer assessment survey. Inside Higher Ed obtained the peer assessment survey forms submitted by 48 of the top 100 public universities in the 2009 US News university rankings. While she found some gaming and some “major oddities,” most respondents gave “honest, if imperfect” responses. Her overall conclusion:

the reputational survey is subject to problems, such as haphazard responses and apathetic respondents, that add to the lingering questions about its legitimacy.

Some of the people who responded on behalf of universities complained about the difficulty of giving an overall evaluation of a university, as opposed to particular programs. Presumably, that’s less of a problem for the law-school survey. The real problem was time:

Ten hours. With 260-some colleges, giving each two or three minutes of attention, that’s how long it would take to adequately respond to the U.S. News survey, estimates Daniel M. Fogel, president of the University of Vermont. And he says that’s time no one like him can afford to spend.

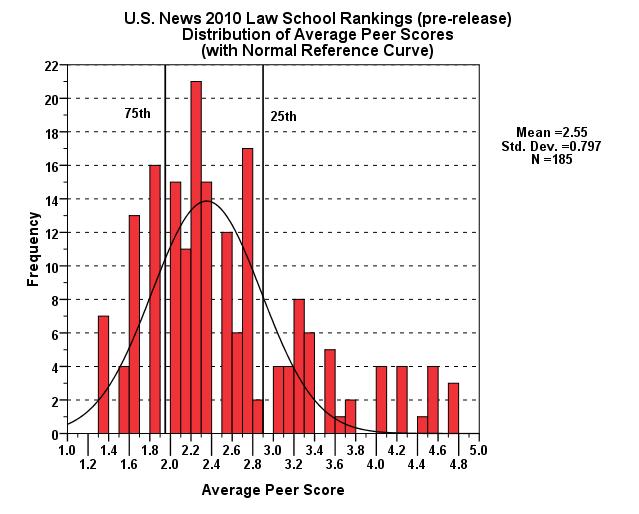

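With the number of law schools at 200 or so (and growing!), the time problem also affects the law-school peer assessment surveys. At two or three minutes per school, a conscientious response would still take roughly seven to ten hours.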

Gary Rosin